In recent years, as we navigate the age of artificial intelligence, a plethora of new terms have entered the lexicon. Some older concepts have gained renewed attention, one of which is the “Neural Network.” This term is becoming increasingly prevalent, and its relevance is likely to grow.

Artificial intelligence systems, such as automatic translation and speech recognition, have surged in prominence over the last decade, largely due to a technique known as “deep learning.” This approach is essentially a modern iteration of the “neural network,” a concept that has been around for over 70 years. To demystify what a neural network is, let’s delve into its intricacies.

At its core, a neural network is a way of doing machine learning: a computer learns to perform a specific task by analyzing training examples. The approach is loosely inspired by how the human brain processes information. Typically, the examples are labeled by hand in advance. For instance, an object recognition system might be trained on thousands of labeled images of objects such as phones or soccer balls, allowing it to find the visual patterns that consistently correlate with each label.

More formally, a neural network is a machine learning model loosely modeled on the human brain: it uses processes that mimic the way biological neurons work together to identify patterns, weigh options, and arrive at conclusions. These networks, sometimes called artificial neural networks (ANNs) or simulated neural networks (SNNs), are a subset of machine learning and lie at the heart of deep learning models.

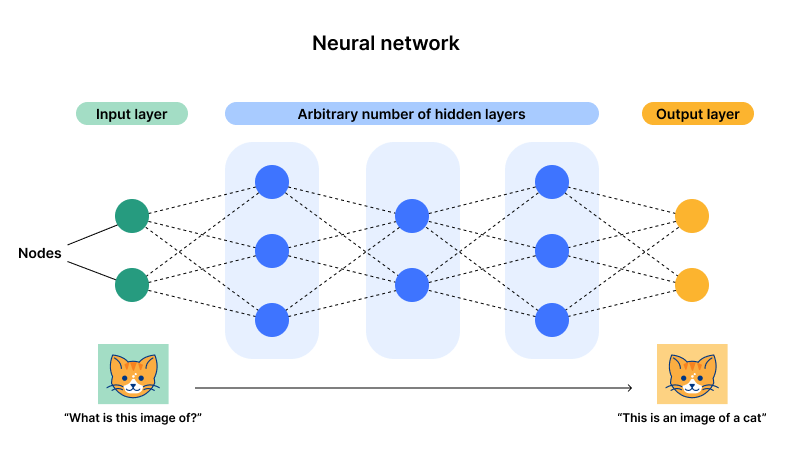

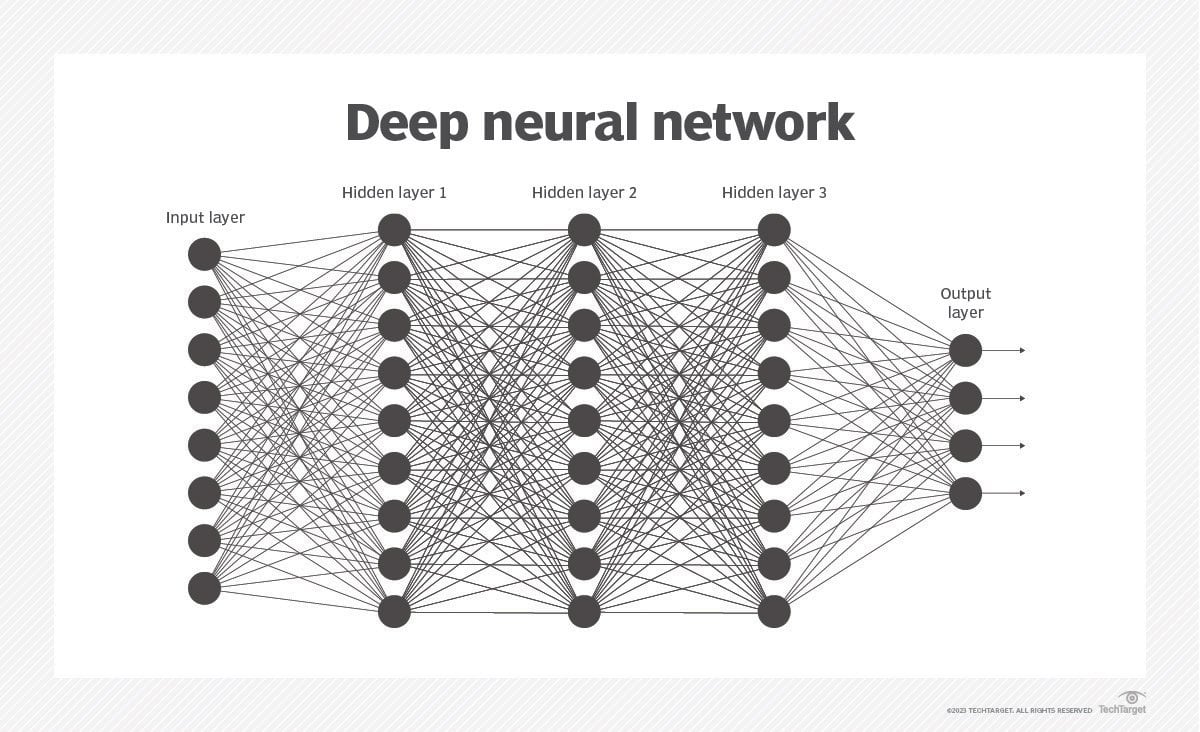

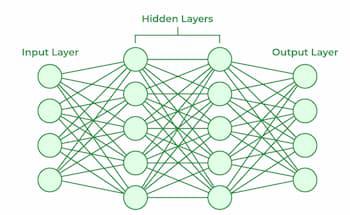

Each neural network consists of layers of nodes, or artificial neurons: an input layer, one or more hidden layers, and an output layer. Each node connects to nodes in the next layer and has an associated weight and threshold. If a node’s output exceeds its threshold, the node activates and passes data on to the next layer of the network; otherwise, it passes nothing along.
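The threshold rule above can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: the helper names (`threshold_node`, `xor_network`) are hypothetical, and the weights and thresholds are hand-picked assumptions chosen so that a two-node hidden layer feeding a one-node output layer computes XOR.

```python
def threshold_node(inputs, weights, threshold):
    """Return 1 (activate) if the weighted sum of inputs exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def xor_network(a, b):
    """Tiny network: 2 inputs -> hidden layer of 2 nodes -> 1 output node.

    The weights and thresholds are hypothetical, hand-picked values for
    illustration; they are not learned from data.
    """
    inputs = [a, b]
    # Hidden layer: one node behaves like OR, the other like AND.
    h_or = threshold_node(inputs, [1, 1], 0.5)
    h_and = threshold_node(inputs, [1, 1], 1.5)
    # Output layer: fires only when OR is active but AND is not.
    return threshold_node([h_or, h_and], [1, -1], 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

A node that does not clear its threshold outputs 0, so it contributes nothing to the next layer. XOR is a classic function that a single threshold node cannot compute on its own, which is why the hidden layer is needed here.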

Neural networks rely on training data to improve their accuracy over time. Once fine-tuned, they become powerful tools in computer science and artificial intelligence, allowing data to be classified and clustered at high speed. One of the best-known applications of neural networks is Google’s search algorithm.
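To make “learning from training data” concrete, here is a sketch of the classic perceptron learning rule, which adjusts a single threshold node’s weights and bias from labeled examples instead of hand-picking them. The function names, the AND-gate dataset, and the learning rate of 1.0 are illustrative assumptions; modern networks are usually trained with gradient-based methods instead, as discussed later in this article.

```python
def predict(weights, bias, x):
    """Threshold node: output 1 if w·x + b > 0, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(examples, learning_rate=1.0, epochs=20):
    """Perceptron learning rule on (inputs, label) pairs with labels 0 or 1."""
    n = len(examples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in examples:
            error = label - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                mistakes += 1
                # Nudge each weight and the bias toward the correct answer.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, bias


# Hypothetical labeled training data: logical AND.
and_examples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_examples)
print(weights, bias)
print([predict(weights, bias, x) for x, _ in and_examples])
```

Because AND is linearly separable, the rule is guaranteed to stop making mistakes after a finite number of updates; for data that is not separable (such as XOR) a single node never converges, which is one motivation for hidden layers.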

In simple terms, these networks consist of algorithms designed to recognize patterns and relationships in data, loosely analogous to human cognition. The basic unit of a neural network is the neuron, a simplified analogue of a brain cell. Each neuron receives inputs, processes them, and produces an output. Neurons are organized into layers: an input layer that receives the raw inputs, one or more hidden layers that process the data, and an output layer that delivers the final prediction or decision.
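The flow from the input layer through the hidden layers to the output layer is called a forward pass. The sketch below assumes a fully connected layout and the sigmoid activation (one common choice among several); the function names are hypothetical and the weights and biases are made-up values.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs, adds its bias,
    and applies the sigmoid. weights[j] holds neuron j's input weights."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, network):
    """Pass data through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in network:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                 # output layer
]
print(forward([1.0, 2.0], network))  # a one-element list with a value in (0, 1)
```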

The adjustable parameters of each neuron are its weights and bias. The weights determine how strongly each input signal influences the neuron, and the bias shifts the point at which it activates. As the network learns, these values are fine-tuned, so they effectively store the network’s evolving knowledge. Other settings, known as hyperparameters, are chosen before training rather than learned, for example the learning rate (how large each update step is) and the number of training epochs (how many passes are made over the data); they affect how quickly and how well the network trains.
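The split between learned parameters and preset hyperparameters can be sketched as follows. The `Hyperparameters` fields, the uniform initialization range, and the helper names are illustrative assumptions rather than a standard API.

```python
import random
from dataclasses import dataclass


@dataclass
class Hyperparameters:
    """Settings chosen before training; they are not learned from data."""
    learning_rate: float = 0.1  # size of each parameter update
    epochs: int = 100           # number of passes over the training data


def init_parameters(layer_sizes, seed=0):
    """Small random weights and zero biases for a fully connected network.

    layer_sizes lists the neuron count per layer, e.g. [2, 3, 1] for 2 inputs,
    one hidden layer of 3 neurons, and 1 output neuron. These values are what
    training adjusts.
    """
    rng = random.Random(seed)
    network = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        network.append((weights, biases))
    return network


def count_parameters(network):
    """Total number of learnable weights and biases."""
    return sum(len(b) + sum(len(row) for row in w) for w, b in network)


hp = Hyperparameters(learning_rate=0.05, epochs=500)
params = init_parameters([2, 3, 1])
# (2*3 weights + 3 biases) + (3*1 weights + 1 bias) = 13 learnable parameters
print(hp, count_parameters(params))
```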

During training, the neural network is fed data, makes predictions based on its current knowledge (its weights and biases), and evaluates how accurate those predictions are. This evaluation uses a loss function, the network’s “scorekeeper”: after each prediction, the loss function measures how far the prediction deviates from the actual result. The primary objective of training is to minimize this loss.
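Two widely used loss functions are sketched below: mean squared error, often used when predicting numbers, and binary cross-entropy, often used for yes/no labels. The example labels and predictions are made-up values; in both cases a lower score means the predictions are closer to the labels.

```python
import math


def mean_squared_error(targets, predictions):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


def binary_cross_entropy(targets, predictions, eps=1e-12):
    """Penalizes confident wrong answers heavily. Predictions are probabilities
    in (0, 1); eps clamps them so log() never receives 0."""
    total = 0.0
    for t, p in zip(targets, predictions):
        p = min(max(p, eps), 1 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(targets)


labels = [1, 0, 1]
good = [0.9, 0.1, 0.8]  # close to the labels
bad = [0.2, 0.7, 0.4]   # far from the labels
print(mean_squared_error(labels, good), mean_squared_error(labels, bad))
print(binary_cross_entropy(labels, good), binary_cross_entropy(labels, bad))
```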

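Backpropagation plays a pivotal role in the learning process. Once the loss has been computed, backpropagation works backward from the output layer, using the chain rule of calculus to calculate how much each weight and bias contributed to the error (the gradient of the loss with respect to that parameter). It acts as a feedback mechanism: these gradients indicate which parameters to adjust, in which direction, and by roughly how much, so that future predictions improve.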
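Here is a minimal backpropagation sketch for a deliberately tiny network (one input, one hidden sigmoid neuron, one output sigmoid neuron) with a squared-error loss; the architecture, parameter values, and names are assumptions chosen for readability. It also checks each gradient against a finite-difference estimate, a common sanity check when implementing backpropagation by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """1 input -> 1 hidden sigmoid neuron -> 1 output sigmoid neuron."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = sigmoid(w2 * h + b2)
    return h, y


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradients of the loss w.r.t. (w1, b1, w2, b2), output layer first."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    # Output layer error signal: dLoss/dy * dy/dz2, using sigmoid'(z) = y * (1 - y).
    delta2 = (y - target) * y * (1 - y)
    grad_w2, grad_b2 = delta2 * h, delta2
    # Hidden layer: pass the error back through w2, then through the hidden sigmoid.
    delta1 = delta2 * w2 * h * (1 - h)
    grad_w1, grad_b1 = delta1 * x, delta1
    return [grad_w1, grad_b1, grad_w2, grad_b2]


# Compare each analytic gradient with a central finite-difference estimate.
params, x, target = [0.4, -0.1, 0.7, 0.2], 1.5, 1.0
eps = 1e-6
for i, g in enumerate(backprop(params, x, target)):
    plus = params.copy()
    plus[i] += eps
    minus = params.copy()
    minus[i] -= eps
    numeric = (loss(plus, x, target) - loss(minus, x, target)) / (2 * eps)
    print(f"param {i}: backprop={g:.8f} numeric={numeric:.8f}")
```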

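Optimization techniques such as “gradient descent” use these gradients to adjust weights and biases efficiently. Gradient descent is a methodical way of reaching the lowest point of the loss in incremental steps. Imagine navigating rough terrain, aiming to find the lowest point: at each step you move a short distance in the direction that slopes downward most steeply (the negative gradient), with the size of the step set by the learning rate. Following this path leads to ever-lower points.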
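A one-dimensional sketch of gradient descent is shown below. The “terrain” is a hypothetical loss, (w − 3)², whose lowest point is at w = 3; a real network applies the same update to every weight and bias at once, using gradients from backpropagation.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - learning_rate * grad(w)."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


def loss(w):
    """Toy terrain with its lowest point at w = 3."""
    return (w - 3) ** 2


def loss_grad(w):
    return 2 * (w - 3)  # derivative of (w - 3)^2


w = gradient_descent(loss_grad, start=0.0)
print(w, loss(w))  # w ends very close to 3 and the loss very close to 0
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a factor of 0.8. For this particular loss, a learning rate of 1.0 or more makes every step overshoot, so the iterate never settles, which illustrates why the learning rate is treated as a hyperparameter worth tuning.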

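Lastly, a crucial component of neural networks is the activation function. It takes the weighted sum of a neuron’s inputs plus its bias and determines the neuron’s output, in the simplest case deciding whether the neuron activates at all. Nonlinear activation functions let the network learn relationships that a purely linear model could not capture.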
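The sketch below applies several common activation functions (a step threshold, the sigmoid, ReLU, and tanh) to the same weighted sum. The inputs, weights, and bias are made-up values, and `neuron_output` is a hypothetical helper name.

```python
import math


def step(z):
    """Classic threshold: fire (1) if z > 0, else stay silent (0)."""
    return 1.0 if z > 0 else 0.0


def sigmoid(z):
    """Smooth S-curve between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit: pass positive values through, zero out negatives."""
    return max(0.0, z)


def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of inputs plus bias, passed through the activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


inputs, weights, bias = [0.5, -1.0], [0.8, 0.3], -0.2
for fn in (step, sigmoid, relu, math.tanh):
    print(fn.__name__, neuron_output(inputs, weights, bias, fn))
```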

Neural networks excel at learning and identifying patterns directly from data without predefined rules. These networks comprise several fundamental components:

Learning in neural networks involves a structured three-stage process:

Neural networks were first proposed in 1943 by Warren McCulloch and Walter Pitts, two University of Chicago researchers who later moved to MIT in 1952. Neural networks remained a major area of research in both neuroscience and computer science until 1969, when MIT mathematicians Marvin Minsky and Seymour Papert questioned their viability in their book Perceptrons. The technique saw a resurgence in the 1980s, fell out of favor again in the first decade of the 2000s, and has since thrived, fueled largely by the increased processing power of graphics processing units (GPUs).

The history of neural networks extends further back than many realize. The notion of a “thinking machine” dates to ancient Greece. However, let’s explore some pivotal events in recent history:

Various neural network types are designed for specific tasks and applications:

Image recognition was among the first successful neural network applications. However, their utility has expanded rapidly to include:

Deep learning, a subset of machine learning, teaches computers to perform tasks by learning from examples, much as humans do. But how can computers learn? By being fed large datasets. A child learns about the world through senses such as sight, hearing, and smell; similarly, algorithms process varied data, such as images and audio, to mimic human learning.

Deep learning uses neural networks loosely modeled on the human brain to teach computers to classify data and recognize patterns in photos, text, audio, and other data types. It can automate tasks that typically require human intelligence, such as identifying images or transcribing audio.

The human brain contains billions of interconnected neurons that work together to process information. In a similar way, deep learning uses neural networks with multiple layers of software nodes that work together and are trained on large labeled datasets.

In essence, deep learning enables computers to learn by example. Imagine teaching a toddler what a dog is: you point to objects and say “dog,” and through your feedback the toddler learns to identify dogs. Similarly, a computer learns to recognize a “car” by analyzing numerous images and independently identifying the patterns they have in common. This, in a nutshell, is deep learning.

In technical terms, deep learning uses neural networks, inspired by the human brain, that are made up of interconnected layers of nodes. More layers make the network “deeper,” enabling it to learn more intricate features and perform more sophisticated tasks.

While all deep learning models are neural networks, not all neural networks are deep learning models. A neural network with more than three layers, counting the input and output layers, is generally considered a deep learning model. These networks attempt to emulate the behavior of the human brain, allowing them to learn from large amounts of data. Although a network with a single layer can still make approximate predictions, additional hidden layers can help refine its accuracy.
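Stacking more hidden layers is what makes a network “deeper.” The sketch below builds fully connected networks of different depths from a list of layer sizes. The `is_deep` check encodes one common convention (more than three layers, counting input and output, i.e. at least two hidden layers) as an adjustable assumption; the layer sizes, random initialization, and ReLU hidden activation are also illustrative choices.

```python
import random


def build_network(layer_sizes, seed=0):
    """Random weights and zero biases for a fully connected network.
    layer_sizes includes the input and output layers, e.g. [4, 8, 8, 2]."""
    rng = random.Random(seed)
    return [
        ([[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]


def forward(x, network):
    """ReLU on hidden layers, linear output layer."""
    for i, (weights, biases) in enumerate(network):
        x = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(network) - 1:  # hidden layers only
            x = [max(0.0, v) for v in x]
    return x


def is_deep(layer_sizes, min_layers=4):
    """Assumed convention: more than three layers in total (input and output included)."""
    return len(layer_sizes) >= min_layers


shallow = [4, 8, 2]        # input, one hidden layer, output
deep = [4, 16, 16, 8, 2]   # input, three hidden layers, output
for sizes in (shallow, deep):
    out = forward([1.0, 0.5, -0.5, 2.0], build_network(sizes))
    label = "deep" if is_deep(sizes) else "not deep"
    print(sizes, label, len(sizes) - 2, "hidden layers ->", out)
```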

The Neural Processing Unit (NPU) is designed to accelerate artificial intelligence tasks. Apple has integrated NPUs into its chips for years under the name Neural Engine, and the trend is gaining momentum as AI processes and tools become widespread.

What is an NPU?

Essentially, an NPU is a processor specialized for executing machine learning algorithms, unlike general-purpose CPUs and GPUs. NPUs excel at processing large volumes of data in parallel, which suits tasks such as image recognition and natural language processing. When paired with a GPU, an NPU can take over specific tasks such as object detection or accelerating image processing.
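Much of the parallel work in a neural network is multiply-accumulate (MAC) arithmetic: within a layer, each neuron’s weighted sum is independent of the others, so they can be computed at the same time. As a rough illustration, assuming fully connected layers, ignoring biases and activations, and using hypothetical layer sizes for a small image classifier, the sketch below counts those operations for a single input.

```python
def layer_macs(layer_sizes):
    """MACs per layer for one input: every output neuron does one MAC per input."""
    return [n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]


# Hypothetical classifier: 784 pixel inputs -> 128 hidden neurons -> 10 classes.
sizes = [784, 128, 10]
per_layer = layer_macs(sizes)
print(per_layer, sum(per_layer))  # [100352, 1280] 101632
```

Even this small network needs about a hundred thousand MACs per input, and the count grows with batch size, input resolution, and depth. That volume of independent, repetitive arithmetic is the kind of workload dedicated parallel hardware is built to speed up.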

Why do we need an NPU?

AI tools demand dedicated processing power, and the requirements vary by industry, use case, and software. With generative AI use cases on the rise, a revamped computing architecture tailored for AI is essential. Alongside the CPU and GPU, the NPU is designed specifically for AI tasks. Pairing an NPU with the appropriate processors enables advanced AI experiences, improving application performance and efficiency while reducing power consumption and extending battery life.
