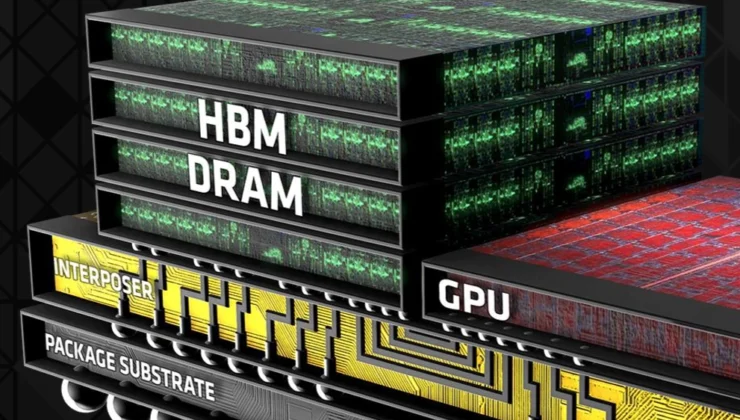

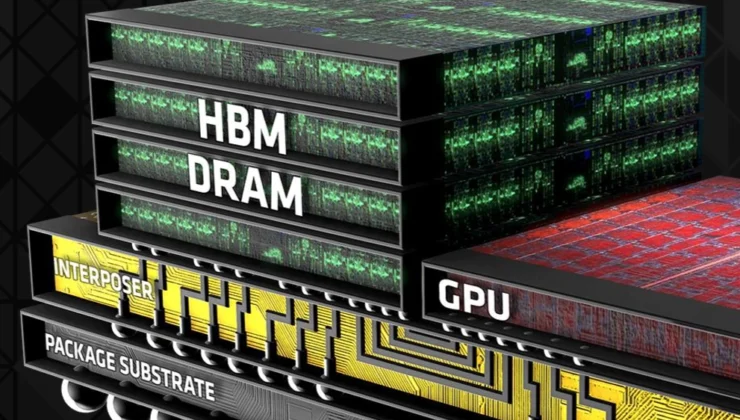

JEDEC has officially published the High Bandwidth Memory 4 (HBM4) standard, designated JESD270-4, a significant advancement in memory technology designed to meet the escalating demands of AI workloads, high-performance computing (HPC), and data center environments. The new standard introduces substantial architectural changes and interface upgrades to boost memory bandwidth, capacity, and efficiency.

HBM4 continues to leverage the vertically stacked DRAM chip architecture, a hallmark of the HBM series, offering remarkable improvements in bandwidth, efficiency, and design flexibility over its predecessor, HBM3. The standard supports transfer rates reaching up to 8 Gb/s over a 2048-bit interface, delivering an impressive 2 TB/s of total bandwidth.
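The 2 TB/s figure follows directly from the per-pin rate and the interface width. As a quick sanity check, the arithmetic can be sketched as below; the function name is purely illustrative, and the calculation gives theoretical peak bandwidth per stack, not sustained throughput.

```python
def peak_bandwidth_gb_per_s(pin_rate_gbit_s: float, interface_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s for one stack.

    Each of the interface's data pins moves `pin_rate_gbit_s` gigabits
    per second; dividing by 8 converts bits to bytes.
    """
    return pin_rate_gbit_s * interface_bits / 8


# Figures quoted in the article: 8 Gb/s per pin, 2048-bit interface.
hbm4_peak = peak_bandwidth_gb_per_s(8, 2048)
print(f"HBM4 peak: {hbm4_peak:.0f} GB/s (about {hbm4_peak / 1000:.1f} TB/s)")
```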

A noteworthy upgrade is the increase in the number of independent channels per stack, expanding from 16 in HBM3 to 32 in HBM4. Each channel now includes two pseudo-channels, enabling greater access flexibility and parallelism in memory operations.
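To make the channel organization concrete, here is a small sketch of how the counts work out. It assumes the 2048-bit interface is divided evenly across channels and pseudo-channels, which the article does not state explicitly; the variable names are illustrative.

```python
INTERFACE_BITS = 2048            # total interface width per stack
CHANNELS = 32                    # independent channels in HBM4
PSEUDO_CHANNELS_PER_CHANNEL = 2  # pseudo-channels in each channel

# Total independently addressable pseudo-channels per stack.
total_pseudo_channels = CHANNELS * PSEUDO_CHANNELS_PER_CHANNEL

# Assumption: the data width is split evenly.
bits_per_channel = INTERFACE_BITS // CHANNELS
bits_per_pseudo_channel = bits_per_channel // PSEUDO_CHANNELS_PER_CHANNEL

print(f"{total_pseudo_channels} pseudo-channels per stack")
print(f"{bits_per_channel} bits per channel, "
      f"{bits_per_pseudo_channel} bits per pseudo-channel")
```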

In terms of power efficiency, the JESD270-4 standard supports a variety of voltage levels to accommodate different system requirements, including options for 0.7V, 0.75V, 0.8V, or 0.9V VDDQ and 1.0V or 1.05V VDDC. These configurations contribute to lower power consumption and enhanced energy efficiency. HBM4 maintains backward compatibility with existing HBM3 controllers, allowing a single controller to manage both memory standards, thereby simplifying integration and enabling more versatile system designs.

Additionally, HBM4 incorporates Directed Refresh Management (DRFM), which enhances row-hammer mitigation. On the capacity front, HBM4 supports stack heights from 4-high to 16-high, with DRAM die densities of 24Gb or 32Gb. A 16-high stack of 32Gb dies offers a cube capacity of up to 64GB, providing higher memory density for demanding applications.
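The capacity figures are simple multiplication: die density times the number of dies in the stack, converted from gigabits to gigabytes. The sketch below tabulates the combinations; the intermediate heights of 8 and 12 are taken from JEDEC's announcement rather than from the range quoted above, and the function name is illustrative.

```python
def stack_capacity_gbytes(die_density_gbit: int, stack_height: int) -> float:
    """Capacity of one HBM stack in GB: dies x density, 8 bits per byte."""
    return die_density_gbit * stack_height / 8


DIE_DENSITIES_GBIT = (24, 32)
STACK_HEIGHTS = (4, 8, 12, 16)  # 8 and 12 assumed per JEDEC's announcement

for density in DIE_DENSITIES_GBIT:
    for height in STACK_HEIGHTS:
        capacity = stack_capacity_gbytes(density, height)
        print(f"{density}Gb x {height}-high = {capacity:.0f} GB")
```

The largest combination, 32Gb dies in a 16-high stack, gives the 64GB maximum quoted above.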

A notable architectural innovation in HBM4 is the separation of the command and data buses, designed to increase concurrency and reduce latency. This change targets AI and HPC workloads where multi-channel operation is prevalent, and is supported by improvements in the physical interface and signal integrity.

The development of HBM4 was a collaborative effort involving major industry players such as Samsung, Micron, and SK hynix. These companies are expected to begin showcasing HBM4-compatible products soon, with Samsung indicating plans to commence production in 2025 to meet the rising demand from AI chipmakers and hyperscalers.

As AI models and HPC applications increasingly require more computational resources, the demand for memory with higher bandwidth and greater capacity continues to grow. The introduction of the HBM4 standard aims to address these needs by laying the groundwork for the next generation of memory technologies, designed to tackle the data throughput and computational challenges associated with these workloads.
