Chinese technology giant Alibaba has unveiled Qwen3, its latest family of artificial intelligence models. The family, which Alibaba describes as “hybrid” reasoning models, is designed to compete with major US-based players such as OpenAI and Google while following an open-source philosophy.

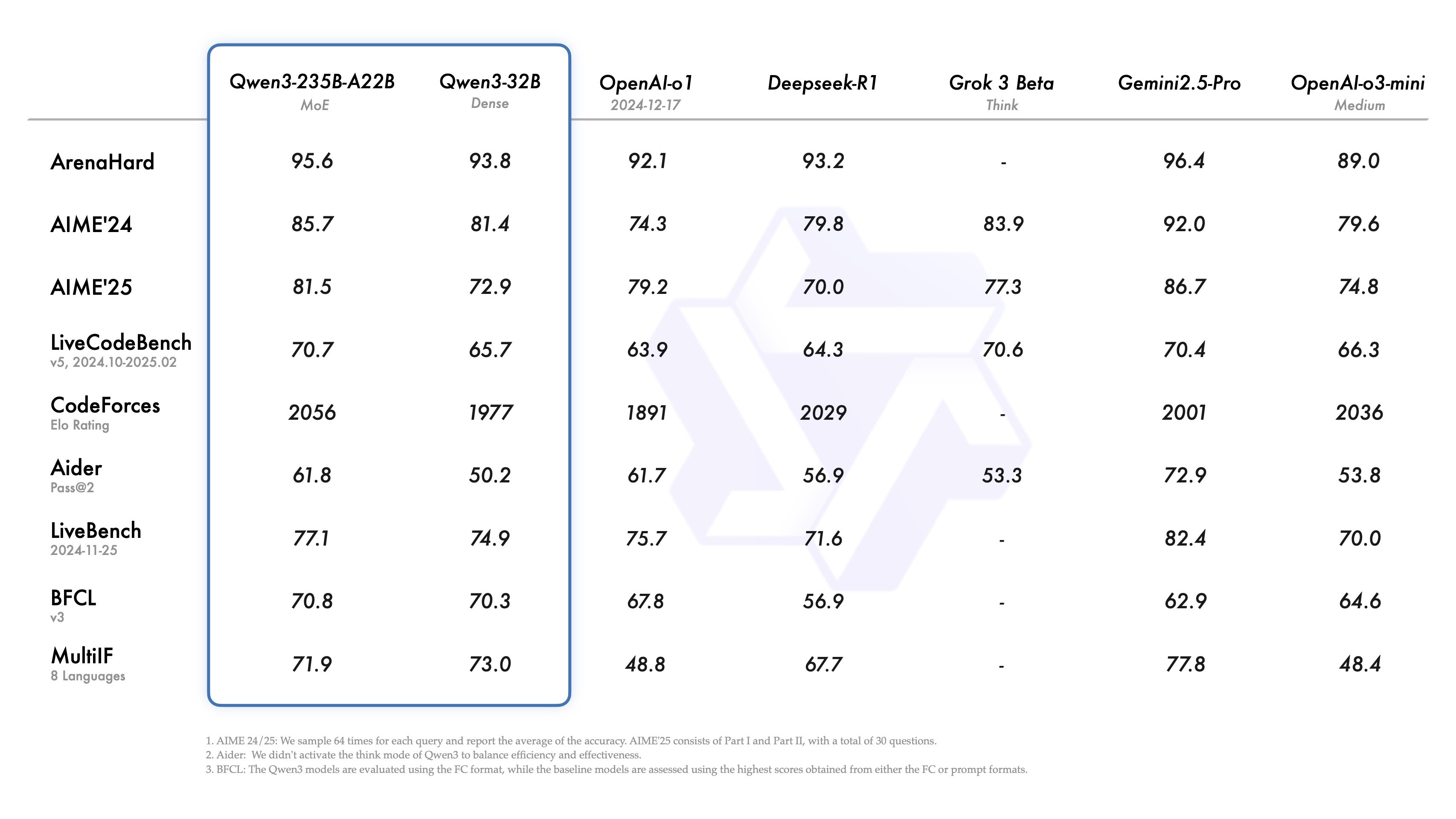

The Qwen3 family comprises a wide range of models, from 600 million to 235 billion parameters. Parameter count roughly corresponds to a model’s problem-solving ability, and models with more parameters generally perform better than those with fewer. According to Alibaba, Qwen3-235B-A22B, the largest model in the family, outperforms OpenAI’s o3-mini and Google’s Gemini 2.5 Pro on specific benchmarks. Although the Qwen3 models may not yet surpass OpenAI’s latest high-end models such as o3 and o4-mini, they still show strong performance. Notably, the most powerful Qwen3 model is not yet publicly accessible. However, models such as Qwen3-32B are available for download on platforms such as Hugging Face and GitHub. Qwen3 models can also be accessed through cloud providers such as Fireworks AI and Hyperbolic.

One of the standout features of the Qwen3 models is their “hybrid” configuration. A hybrid model can spend extra time reasoning through complex problems while answering simpler queries quickly, in effect “thinking when necessary.” Users can decide how much computation the model spends on this reasoning, which Alibaba engineers describe as “control of the thinking budget.” Similar hybrid approaches have recently begun to appear in models from other developers. In addition, some Qwen3 models use a “Mixture of Experts” (MoE) architecture. An MoE model breaks a task into sub-tasks and routes them to specialized “expert” sub-models, so only part of the network is active for any given input, which makes answering queries more computationally efficient. The “A22B” suffix in Qwen3-235B-A22B, for example, indicates that roughly 22 billion of its 235 billion parameters are active at a time.
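The routing idea behind a Mixture of Experts layer can be illustrated with a small toy sketch. This is not Alibaba’s implementation: the experts here are simple hypothetical functions, the gate scores are made-up numbers, and top-2 routing is an assumption chosen for illustration. The point is only that a gate picks a few experts per input and the rest stay idle.

```python
import math

def softmax(scores):
    """Convert raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    routes = route_top_k(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, routes

# Hypothetical toy experts: each one just scales its input differently.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Made-up gate scores for one input; a real model computes these with a learned layer.
gate_scores = [0.1, 2.0, 0.3, 1.5]

output, routes = moe_layer(10.0, experts, gate_scores, k=2)
print("experts used:", [i for i, _ in routes])
print("blended output:", round(output, 2))
```

In this toy run only two of the four experts are evaluated, which mirrors why an MoE model can have a very large total parameter count while using a much smaller share of it for each input.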

Alibaba reports that Qwen3 supports 119 languages and was trained on approximately 36 trillion tokens (tokens are the raw pieces of data a model processes; 1 million tokens is roughly equivalent to 750,000 words). The training data includes textbooks, question-and-answer pairs, software code, and AI-generated data. According to Alibaba, this broad dataset helps Qwen3 perform well not only on general knowledge questions but also on mathematics and coding benchmarks. For instance, it surpasses OpenAI’s o1 on coding benchmarks such as LiveCodeBench. Alibaba also claims that Qwen3 excels at tool calling, following instructions, and reproducing specific data formats.
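To put the training figure in perspective, the article’s rule of thumb (1 million tokens is roughly 750,000 words) can be applied directly to the reported 36 trillion tokens. The short calculation below is only a back-of-the-envelope estimate; the constant and function names are illustrative, and the real words-per-token ratio varies by language and tokenizer.

```python
# Rule of thumb from the article: 1,000,000 tokens is about 750,000 words.
WORDS_PER_TOKEN = 750_000 / 1_000_000

# Training set size reported by Alibaba: approximately 36 trillion tokens.
TRAINING_TOKENS = 36 * 10**12

def tokens_to_words(tokens, words_per_token=WORDS_PER_TOKEN):
    """Estimate a word count from a token count using a fixed ratio."""
    return tokens * words_per_token

approx_words = tokens_to_words(TRAINING_TOKENS)
print(f"{approx_words:.2e} words")  # prints "2.70e+13 words"
```

Under that ratio, 36 trillion tokens corresponds to roughly 27 trillion words.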
